Making evidence accessible, accurate and actionable

An overview of the Education Endowment Foundation (EEF)’s Teaching and Learning Toolkit summarises what I see as the potential contribution it makes to research-informed teaching and to professional development and learning in schools – the challenge of making evidence accessible and accurate in a way that is sufficiently actionable to ensure improvement.

Publishing summaries of evidence doesn’t automatically translate into schools improving their teaching practices!

Sometimes people misinterpret what you intended. I’ve heard accounts of schools devoting extra hours to marking pupils’ work under the impression that this would deliver effective feedback. Marking pupils’ work focuses on feedback delivered and the efforts of the teacher, but not whether the feedback was received, understood and acted upon by the learner.

One local authority in Scotland attempted to use the information in the Toolkit as justification for increasing class sizes, ignoring the finding that pupils in larger classes tend to do a little bit less well, on average. The overall argument is that reducing class sizes is only moderately effective and very expensive, so it is not cost-effective as a strategy to help learners. Challenges like these are at the heart of research-informed teaching.

The EEF’s Teaching and Learning Toolkit

The Toolkit was originally funded by the Sutton Trust to summarise evidence from educational research to challenge thinking about effective spending for what was then the new pupil premium policy in England. This policy aimed to increase spending in schools to benefit children and young people from disadvantaged backgrounds. The Toolkit was subsequently adopted by the EEF and is now in widespread use in England, with just under two thirds of headteachers saying they consult it [1].

Similar approaches to evidence synthesis and translation, as well as adoption of evidence in policy-making, are growing in popularity, reflecting a global movement towards more effective engagement with research and evidence.

In the Toolkit a number of educational approaches or programmes are listed, and categorised in terms of the evidence from research for their effectiveness, the cost and average impact. The aim was to create something like a ‘Which?’ consumer guide for education research, covering topics of interest and relevance to policy and practice.

The UK Government has cited the Toolkit as an exemplar for its network of ‘What Works’ centres [2]. This commitment to spend public money on the most effective practices is important and impressive, but the ‘What Works’ label can imply a certainty that I believe oversimplifies what the research actually means. Research can only tell us what has worked in the past, not what will work in the future. I argue further that it can only offer indications of what may work under certain conditions.

The Toolkit website (see Figure 1) summarises the messages across different areas of education research with the aim of helping education practitioners make decisions about supporting their pupils’ attainment in schools. It is designed to be accessible as a web-based resource for practitioners and other educational decision-makers. It combines quantitative comparisons of impact on educational attainment in an approach sometimes called ‘meta-synthesis’. This is where the findings from different meta-analyses are combined to give an overall effect.

This is similar to, and was inspired by, John Hattie’s approach in Visible Learning [3]. The aim of the synthesis is to provide information and guidance for practitioners who are often interested in the relative benefit of different educational approaches, as well as information from research about how to adopt or implement a specific approach. What distinguishes the Toolkit is the inclusion of cost estimates for the different approaches to guide spending decisions along with an indication of the robustness of the evidence in each area.

Bananarama: it ain’t what you do it’s the way that you do it

The Bananarama principle is named after Bananarama and Fun Boy Three’s 1982 hit single ‘It Ain’t What You Do’. Most people smile at the joke, but even if they don’t, the song is something of an earworm and tends to stick in your head. It can also be seen as an important principle for research-informed teaching when acting on the findings of research. The initial point was a simple one: it wouldn’t be what schools spent, but the way that they spent the pupil premium that would get results [5]. The deployment of teaching assistants, reducing class sizes and ability grouping are all examples of the Bananarama principle.

The principle underscores the power but also the limitations of evidence in helping a teacher decide what to do in a classroom: how an approach is implemented is vital and just as important as its content. This principle reflects the spread of findings in each area of the Toolkit. In the meta-analyses there are examples of successful and unsuccessful approaches. The overall average gives you an indication of what typically happens when an approach is adopted. Evidence can be helpful, but it is never going to be enough. Put more formally, Bananarama also reflects a statistical argument.

The range of effects within a Toolkit strand is greater than the difference between neighbouring strands. This suggests that although people have been successful (or unsuccessful) on average when adopting an approach there is also a spread of effects that we should take into account. The Toolkit only tells us what has worked from other studies “on average”, and contains all of the statistical risks of averaging averages involved in this kind of synthesis.
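The statistical point can be illustrated with a minimal sketch. The effect sizes below are invented purely for illustration and are not taken from the Toolkit; the strand names and the `summarise` helper are likewise hypothetical. The sketch simply compares the range of effects inside each strand with the gap between the two strand averages.

```python
from statistics import mean

# Hypothetical effect sizes from individual meta-analyses in two
# neighbouring strands. These numbers are invented for illustration
# and are NOT Toolkit data.
strand_a = [0.10, 0.25, 0.40, 0.55, 0.70]
strand_b = [0.05, 0.20, 0.30, 0.45, 0.60]


def summarise(effects):
    """Return the average effect and the range (largest minus smallest)."""
    return mean(effects), max(effects) - min(effects)


mean_a, range_a = summarise(strand_a)
mean_b, range_b = summarise(strand_b)

# Difference between the two strand averages.
gap_between = abs(mean_a - mean_b)

print(f"Strand A: average {mean_a:.2f}, range {range_a:.2f}")
print(f"Strand B: average {mean_b:.2f}, range {range_b:.2f}")
print(f"Gap between strand averages: {gap_between:.2f}")
print("Range within each strand exceeds the gap:",
      range_a > gap_between and range_b > gap_between)
```

With numbers like these, choosing the strand with the slightly higher average matters much less than where in that strand’s range a particular school’s implementation ends up.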

I think of this evidence as providing practitioners with a “good bet” for what is likely to be successful or unsuccessful in a new context, based on how large the average effect is, represented by the average months’ progress icon, as well as the spread of the effects, which gives an idea of the riskiness of the bet.

We also have to remember that average impact in these studies is based on a comparison with a control group or “counterfactual” condition [6]. In averaging the effects, we “average” the comparison conditions. As we become more certain that something is likely to be effective, we become less certain about what it is actually better than.

This is important because an already highly effective school is likely to be better than the “average” comparison or control school. Any typical gains found in research may well be harder to achieve in an already successful classroom or school. Overall, the larger the effect and the narrower the spread of effects, the more likely the average is to be useful in other contexts. The smaller the average effect and the wider the spread of effects, the riskier the bet becomes.
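One way to picture the riskiness of a bet is the rough sketch below. It assumes, purely for illustration, that the effects in a strand follow a normal distribution with a given average and spread; this is my simplifying assumption and not how the Toolkit calculates anything. The function name `chance_of_no_gain` and all the numbers are hypothetical.

```python
from statistics import NormalDist


def chance_of_no_gain(average_effect, spread):
    """Probability of an effect of zero or below, ASSUMING effects are
    normally distributed with this average and spread (standard deviation).
    The normal model is an illustrative assumption only."""
    return NormalDist(mu=average_effect, sigma=spread).cdf(0.0)


# Invented figures: a large average effect with a narrow spread,
# and a small average effect with a wide spread.
safer_bet = chance_of_no_gain(average_effect=0.50, spread=0.15)
riskier_bet = chance_of_no_gain(average_effect=0.10, spread=0.30)

print(f"Large effect, narrow spread: {safer_bet:.1%} chance of no gain")
print(f"Small effect, wide spread:   {riskier_bet:.1%} chance of no gain")
```

Under these assumptions the first approach almost never fails to show a gain, while the second fails to do so roughly a third of the time, even though both have a positive average.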

You need to know what to stop as well as what to start

This line of reasoning also suggests that we need to be clear about what we should stop doing. Whenever schools adopt something new, they must stop doing something else. There is little or no spare time in schools. We rarely reflect on this, so it can be hard to tell what gets squeezed out when we start something new.

Research can also help us think about this, by providing information about things that have not worked, or tend not to work so well, on average. Research like this has clear limitations in its specific applicability. It tells us what has worked, on average and over there, for someone else; it does not tell us what will work here. It is only once we understand these limitations that we can use it appropriately.

Partnership between research and practice

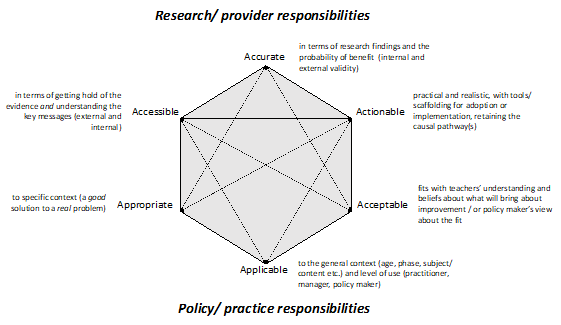

My work in developing the Toolkit with the EEF and trying to communicate what I think it means to teachers has led me to develop a model which represents my understanding of this challenge. Some of the responsibilities in the model are from the perspective of the researcher (see Figure 2). These involve the research being accessible, accurate and actionable. This immediately sets up a series of tensions for the researcher, represented by the connecting lines in the diagram (Figure 3), to summarise findings accurately but succinctly in a way which educational practitioners can understand and put into practice. Accuracy refers mainly to how findings are summarised in relation to what was found (answering the question ‘did it work there?’ or addressing the internal validity of the study).

Understanding how research might be relevant in a new context is problematic in education. This is not least because we have almost no replication in education research and the samples of schools, teachers and pupils are not randomly selected. It is therefore hard to estimate how likely it is that an approach might be equally successful somewhere new. We know that accessibility is a key issue, both in terms of getting hold of research evidence and in terms of understanding it.

One of the main drivers behind the development of the Toolkit website was to create an accessible but accurate resource for education professionals. Academic journal articles are constrained by genre, form and the history of the discipline, and are rarely an easy read for the busy teacher.

How you distil findings into actionable steps is even more challenging. I’ve likened this before to picking the strawberries out of the jam. You can sometimes see that the fruit is there, but it is so boiled and crystallised by the meta-analytic jam-making process that it no longer looks or tastes like strawberries!

Applicable, appropriate and acceptable

From the practice perspective, I believe there are also responsibilities in terms of the research being applicable, appropriate, and acceptable. A good fit between research evidence and the practice context is essential, but I think this needs to be the responsibility of the teacher or school as they best understand how to meet the educational needs and develop the capabilities of their pupils. It is important to know that the research is likely to be applicable in terms of subject, age and approach.

One of my worries about research from fields other than education is being clear about how the findings might apply in a classroom and a specific curriculum context. Psychology research on motivation and neuroscience research into brain function are not directly applicable, though the findings often appear seductively suitable [7]. When these findings are tested in the classroom, they may not always have the effects expected [8].

It is important to identify whether research is appropriate for the particular teacher and the pupils involved. To increase the chances of it being appropriate I think it should meet an identified need or a perceived problem, rather than being plucked from the top of a list of effective strategies, or chosen at random from successful research findings. Identifying a problem or challenge is more likely to create a match between the research context where it successfully made a difference and the new setting. It is likely to replace problematic practice that needs improvement, rather than replacing something at random, which may actually be working well.

One way of looking at the Toolkit is that it is like an encyclopaedia of solutions to educational challenges which others have found in the past. The extent of the impact and distribution of the effects gives you a probability of how likely it is to be useful in the future. The problem is that the actual questions to which we have all of these solutions are no longer attached as they are buried in the original research. It is therefore important to consider whether a particular research-based practice is appropriate as a solution to the challenges a particular school or teacher faces.

The final practice dimension is how acceptable the findings are. At one level, they have to be educationally acceptable. Some kinds of behavioural change may be very efficiently achieved with pain or discomfort, but they would not be educationally or ethically acceptable.

The next level is more difficult to address. To stand a chance of being successful in supporting change, research findings have to be acceptable to the teachers involved. If the findings conflict with deeply held beliefs about effective practice then they may either be rejected and not attempted, or even adopted resentfully and set up to fail. I have always argued that, as a classroom teacher, if you presented me with a robust and rigorously researched reading intervention which was consistently successful when evaluated, I could guarantee to make it fail in my classroom. Teachers are the gatekeepers of their own practice.

There is an irony here that I have experienced. The teachers who are more open to research-based approaches often seem to be the ones who are already highly effective. They actively seek to improve their practice and to increase the range of strategies they have to address teaching and learning challenges. They are eager to try out approaches backed by research.

By contrast, I feel that sometimes those I thought might benefit most from trying out such strategies are perhaps the ones most likely to find them unacceptable or be unwilling to try something new. There is a clear role here for professional development to explore and understand teachers’ beliefs when supporting changes in classroom practice.

Shared responsibility built on professional expertise

We need to be clear about the limitations as well as the potential benefits of research-informed practice. Research can help us understand patterns of effects and identify ‘good bets’ based on the accumulation of findings across studies.

The applicability of these findings is then problematic as we cannot be sure how these general findings apply to a specific context or a particular class of learners. The findings are likely to be helpful, on average, across a large number of classes and schools, but this may not help an individual teacher or a particular school to identify what will work for them.

This suggests that research-informed practice should be a partnership between researchers and practitioners where each partner takes responsibility based on their professional expertise. The Toolkit aims to offer an overview of the relative benefits of different areas of education research. It does not, and cannot, give precise recommendations for research-based practices to an individual teacher.

Top Tips

- Do start with a problem or a challenge you want to solve. You are more likely to find a solution from research which will help improve learning outcomes for children and young people.

- Think about what you will stop doing. How will you create the space to do what you think will help?

- Identify a solution from research that is appropriate and applicable to your context.

- Remember Bananarama: it ain’t what you do it’s the way that you do it! You will need to commit to the solution you have chosen and watch out as you develop it to make sure it is actually helping.

Steve Higgins is Professor of Education at Durham University. A former primary school teacher, he is passionate about the use of evidence from research to support decision-making in schools. He is the lead author of the EEF’s Teaching and Learning Toolkit and is interested in understanding how best to engage with policy-makers and education practitioners to use research to support effective learning in classrooms.

References

- National Audit Office (2015) Funding for disadvantaged pupils: Report by the Comptroller and Auditor General. London: National Audit Office.

- Cabinet Office (2013) ‘What Works Network’, www.gov.uk/guidance/what-works-network

- Bowers, J. S. (2016). The practical and principled problems with educational neuroscience. Psychological Review, 123(5), 600.

- Education Endowment Foundation (2020) The Teaching and Learning Toolkit. London: EEF. https://educationendowmentfoundation.org.uk/evidence-summaries/teaching-learning-toolkit

- Hattie, J.A. (2008) Visible Learning: A synthesis of over 800 meta-analyses relating to achievement. London: Routledge.

- Higgins, S. (2018) Improving Learning: Meta-analysis of intervention research in education. Cambridge: Cambridge University Press.

- Major, L.M. & Higgins, S.E. (2019) What works? Research and Evidence for Successful Teaching. London: Bloomsbury.

- Lazowski, R. A., & Hulleman, C. S. (2016). Motivation interventions in education: A meta-analytic review. Review of Educational Research, 86(2), 602-640.
